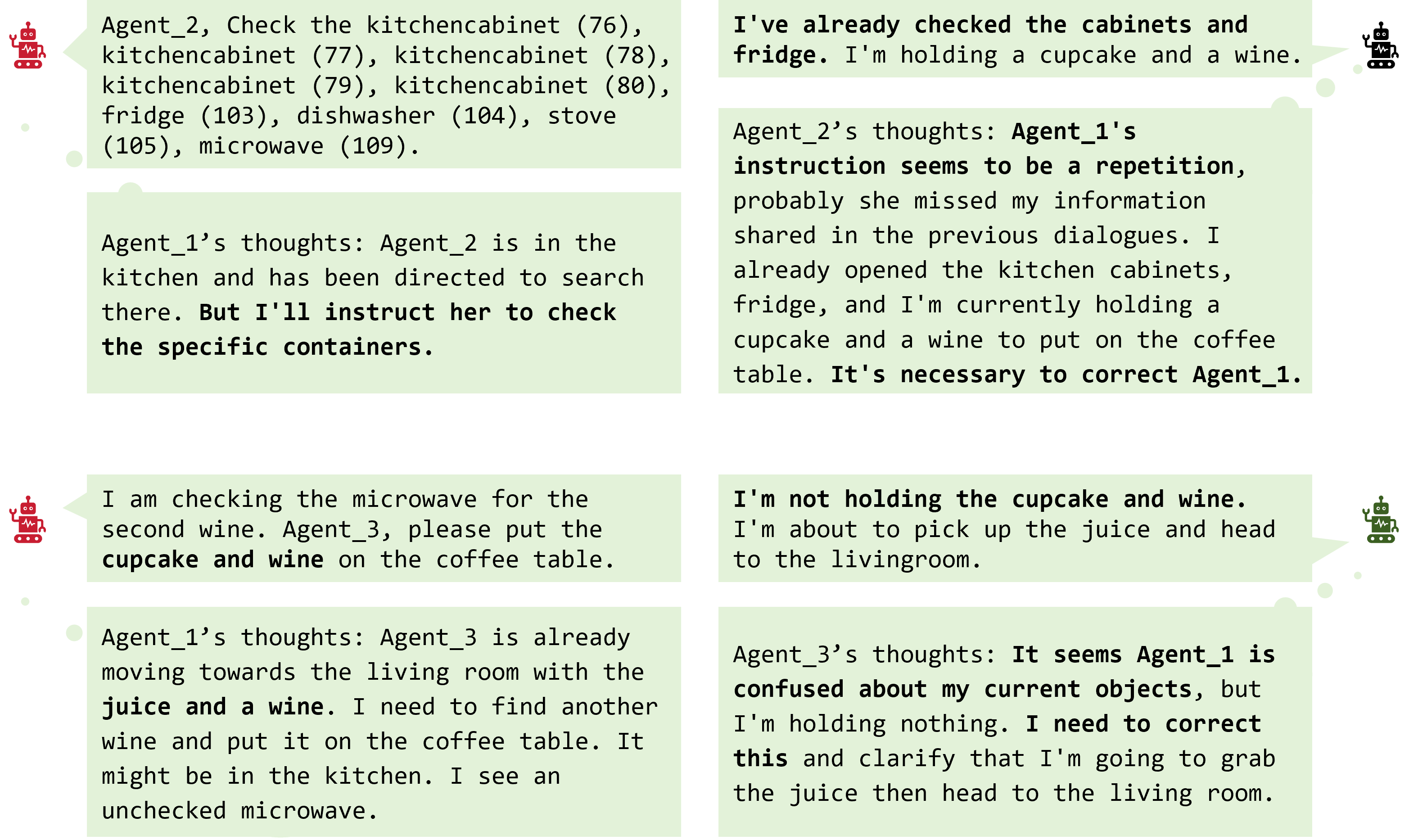

Large Language Models (LLMs) have emerged as integral tools for reasoning, planning, and decision-making, drawing upon their extensive world knowledge and proficiency in language-related tasks. LLMs therefore hold great potential for fostering cooperation in multi-agent systems through natural-language interaction. However, LLM agents tend to over-report and to comply with any instruction, which can cause information redundancy and confusion in multi-agent cooperation.

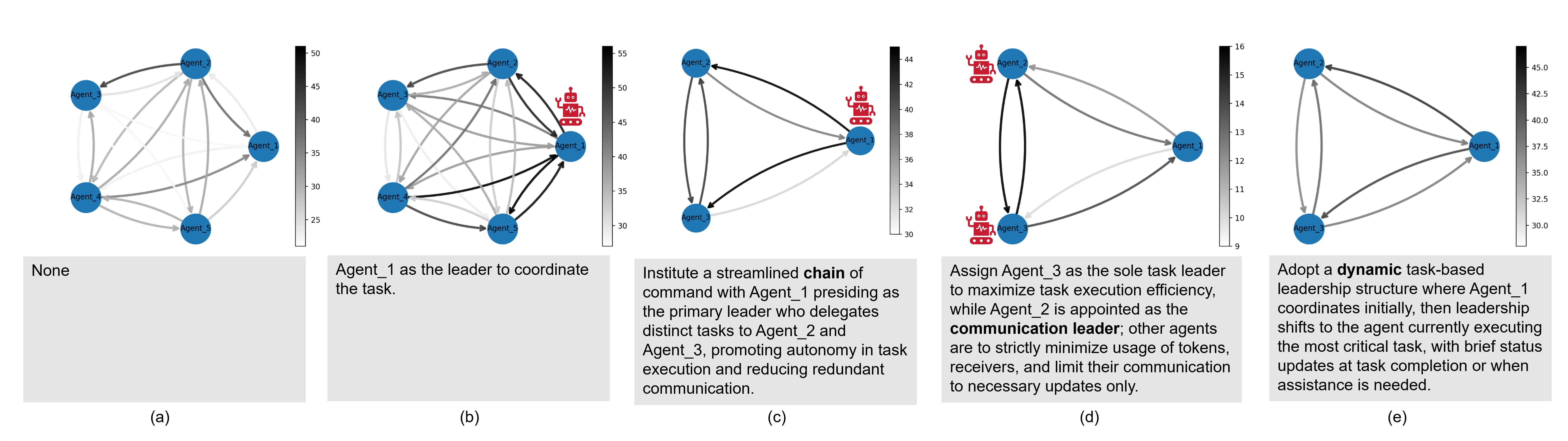

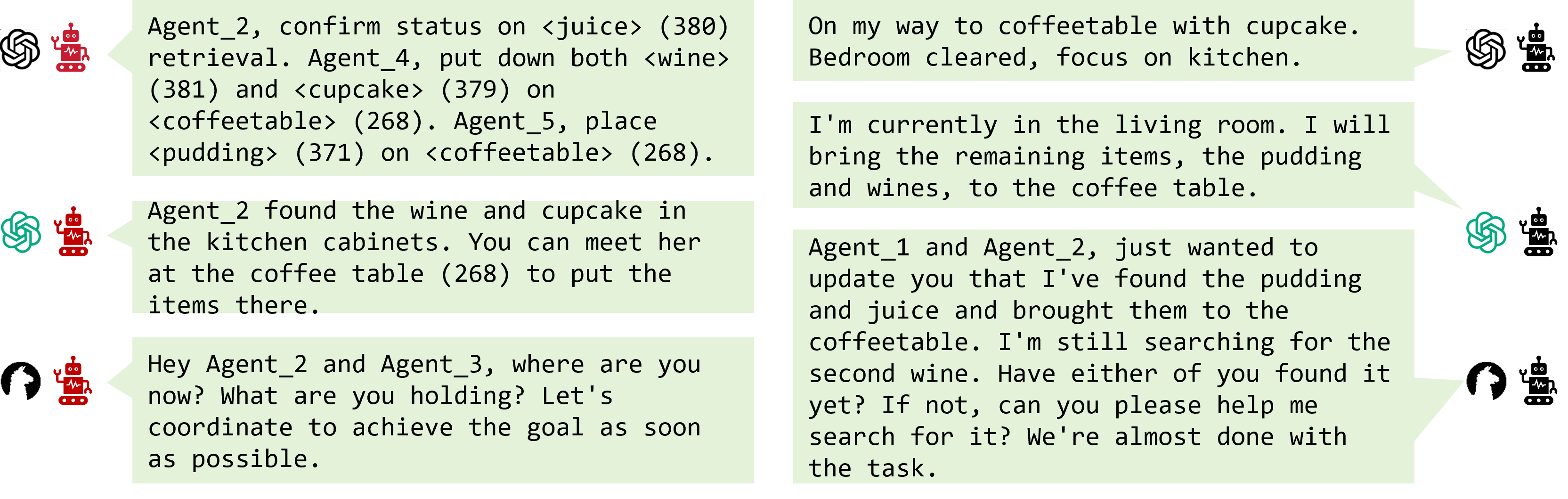

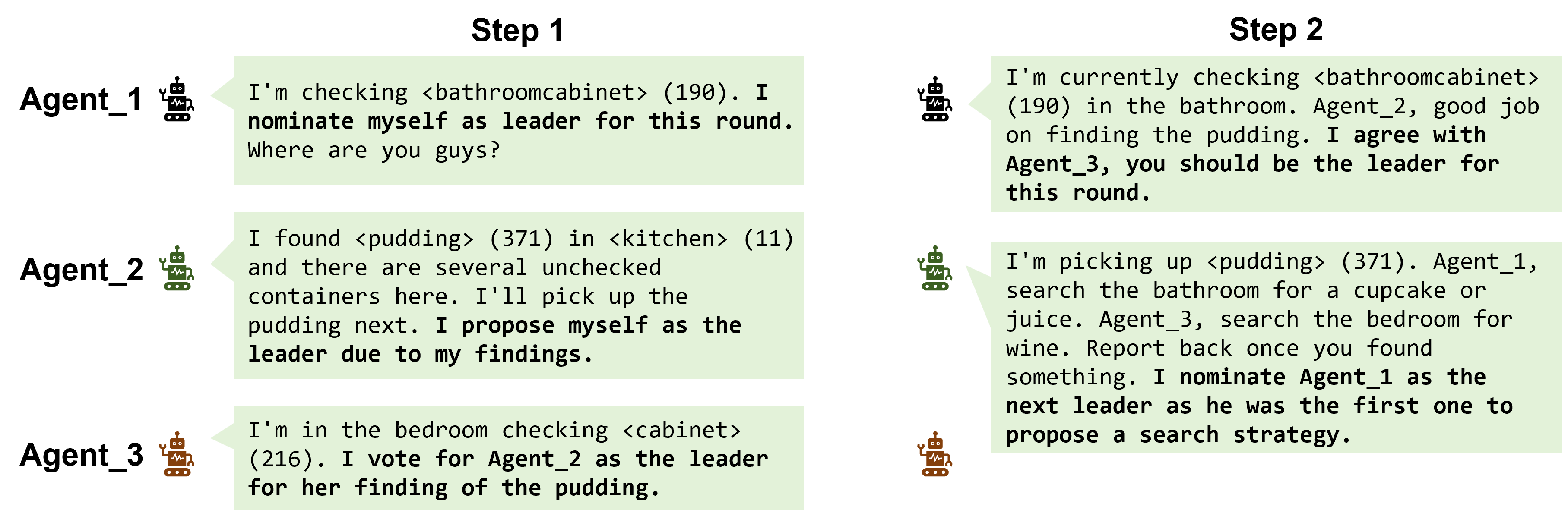

Inspired by human organizations, this paper introduces a framework that imposes prompt-based organization structures on LLM agents to mitigate these problems. Through a series of experiments with embodied LLM agents, our results highlight the impact of designated leadership on team efficiency, shedding light on the leadership qualities displayed by LLM agents and their spontaneous cooperative behaviors.
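The organizational prompts themselves are not reproduced on this page. As a rough illustration of what a "prompt-based organization structure" can look like, the hypothetical helper below prepends a leadership preamble to each agent's prompt. The function name `build_org_prompt` and all prompt wording are assumptions made for this sketch, not the prompts used in the paper.

```python
from typing import List


def build_org_prompt(agent: str, team: List[str], leader: str) -> str:
    """Compose a hypothetical organization preamble for one agent's prompt.

    The wording is illustrative only and is not the prompt used in the paper.
    """
    if agent not in team or leader not in team:
        raise ValueError("agent and leader must both be team members")
    teammates = [name for name in team if name != agent]
    lines = [f"You are {agent}, working in a team with {', '.join(teammates)}."]
    if agent == leader:
        # The designated leader coordinates: it assigns subtasks to the others.
        lines.append("You are the team leader. Assign subtasks to your teammates "
                     "and keep messages short.")
    else:
        # Non-leaders follow the leader and report to it instead of broadcasting.
        lines.append(f"{leader} is the team leader. Follow {leader}'s instructions "
                     f"and report your progress only to {leader}.")
    return "\n".join(lines)


if __name__ == "__main__":
    team = ["Alice", "Bob", "Charlie"]
    for name in team:
        print(build_org_prompt(name, team, leader="Alice"))
        print("---")
```

Because the structure lives entirely in the prompt text, changing who leads (or removing the leader) requires no change to the agents' code.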

Further, we use LLMs themselves to propose improved organizational prompts through a Criticize-Reflect process, yielding novel organization structures that reduce communication costs and improve team efficiency.

LLMs are not explicitly designed for multi-agent cooperation:

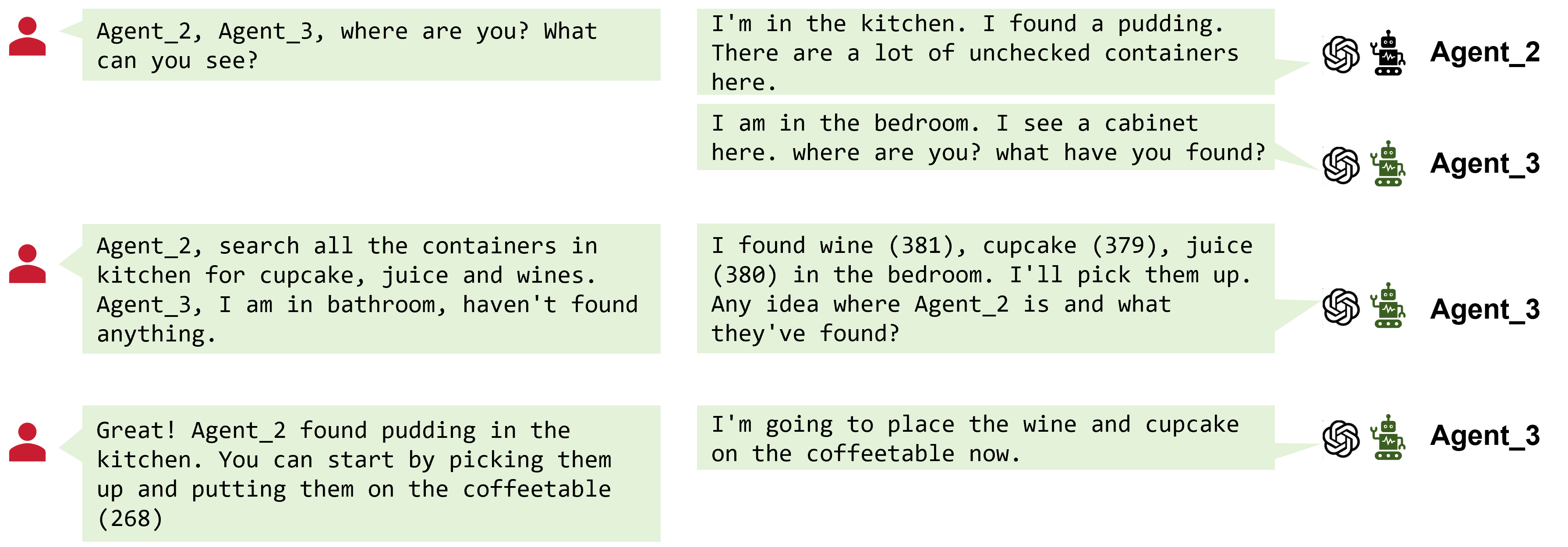

Drawing on prior studies of human collaboration, we design a novel framework that offers the flexibility to prompt and organize LLM agents into various team structures, facilitating versatile inter-agent communication.
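One way to picture "various team structures" is as a directed graph recording which agent may message which. The sketch below is a hypothetical illustration, not the paper's implementation: `communication_graph`, the structure names `"flat"` and `"leader"`, and the per-round message bound computed by `message_count` are all assumptions introduced here.

```python
from typing import Dict, List, Set


def communication_graph(team: List[str], structure: str,
                        leader: str = "") -> Dict[str, Set[str]]:
    """Return, for each agent, the set of teammates it may message (hypothetical)."""
    if structure == "flat":
        # Decentralized team: every agent may message every other agent.
        return {a: {b for b in team if b != a} for a in team}
    if structure == "leader":
        if leader not in team:
            raise ValueError("leader must be a team member")
        # Leader-centric team: the leader talks to everyone, others only to the leader.
        graph = {a: {leader} for a in team if a != leader}
        graph[leader] = {b for b in team if b != leader}
        return graph
    raise ValueError(f"unknown structure: {structure!r}")


def message_count(graph: Dict[str, Set[str]]) -> int:
    """Upper bound on directed messages per round if every agent messages
    all of its allowed recipients."""
    return sum(len(recipients) for recipients in graph.values())


if __name__ == "__main__":
    team = ["A", "B", "C", "D", "E"]
    print(message_count(communication_graph(team, "flat")))
    print(message_count(communication_graph(team, "leader", leader="A")))
```

Under this crude proxy, five agents in a flat team can send up to 20 directed messages per round, versus 8 when all traffic goes through a leader. That is one possible intuition for why a designated leader can cut communication cost, not a result from the paper.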

Specifically, we study two research questions:

We propose the following embodied multi-LLM-agent architecture to enable organized teams of three or more agents to communicate, plan, and act in physical or simulated environments. Borrowing insights from Co-LLM-Agents, we adopt four standard modules: Configurator, Perception Module, Memory Module, and Execution Module.
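A minimal skeleton of how the four modules might fit together in one agent's decision step is sketched below. The module names come from the text above; their exact responsibilities, the `ACTION:` output convention, the memory capacity, and the stubbed `llm` callable are assumptions for illustration only, not the Co-LLM-Agents or paper code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Configurator:
    # Static settings for one agent: its name and its organization prompt.
    name: str
    org_prompt: str


class PerceptionModule:
    def describe(self, observation: Dict[str, str]) -> str:
        # Turn a (hypothetical) structured observation into text for the LLM.
        return "; ".join(f"{k}: {v}" for k, v in sorted(observation.items()))


@dataclass
class MemoryModule:
    # Rolling log of perceptions and received messages.
    entries: List[str] = field(default_factory=list)
    capacity: int = 20

    def add(self, entry: str) -> None:
        self.entries.append(entry)
        # Keep only the most recent entries to bound prompt length.
        self.entries = self.entries[-self.capacity:]


class ExecutionModule:
    def parse(self, llm_output: str) -> str:
        # Hypothetical convention: the LLM ends its answer with "ACTION: <action>".
        for line in reversed(llm_output.strip().splitlines()):
            if line.startswith("ACTION:"):
                return line[len("ACTION:"):].strip()
        return "wait"  # fall back to a no-op when no action is found


class EmbodiedAgent:
    def __init__(self, config: Configurator, llm: Callable[[str], str]):
        self.config = config
        self.llm = llm  # any text-in/text-out model; a stub in the example below
        self.perception = PerceptionModule()
        self.memory = MemoryModule()
        self.execution = ExecutionModule()

    def step(self, observation: Dict[str, str], inbox: List[str]) -> str:
        # Perceive, remember, prompt the LLM, then parse its answer into an action.
        self.memory.add(self.perception.describe(observation))
        for msg in inbox:
            self.memory.add(f"message: {msg}")
        prompt = "\n".join([self.config.org_prompt, *self.memory.entries,
                            "Decide your next action."])
        return self.execution.parse(self.llm(prompt))


if __name__ == "__main__":
    stub = lambda prompt: "I will fetch the apple.\nACTION: pick up apple"
    agent = EmbodiedAgent(Configurator("Bob", "Alice is the team leader."), stub)
    print(agent.step({"room": "kitchen", "visible": "apple"}, ["Alice: get the apple"]))
    # → pick up apple
```

Keeping the organization prompt in the Configurator means a team's structure can be changed per agent without touching perception, memory, or execution.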

To enable organized multi-agent communication:

We introduce a dual-LLM framework that lets the multi-LLM-agent system reflect on and improve its own organizational structure.
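The dual-LLM loop can be sketched as follows, assuming one LLM acts as a critic of a finished episode and the other reflects on that critique to rewrite the organization prompt. The function `criticize_reflect`, the `run_episode` interface, and the cost ordering (fewer steps first, then fewer messages) are hypothetical; the paper's actual prompts and selection criterion may differ.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces: each "LLM" is text in, text out.
LLM = Callable[[str], str]
# Runs one episode with an organization prompt; returns (transcript, steps, messages).
RunEpisode = Callable[[str], Tuple[str, int, int]]


def criticize_reflect(org_prompt: str, run_episode: RunEpisode, critic: LLM,
                      reflector: LLM, rounds: int = 3
                      ) -> Tuple[str, List[Tuple[str, int, int]]]:
    """Iteratively refine an organization prompt (illustrative sketch only)."""
    history: List[Tuple[str, int, int]] = []
    best_prompt: str = org_prompt
    best_cost: Optional[Tuple[int, int]] = None
    prompt = org_prompt
    for i in range(rounds):
        transcript, steps, messages = run_episode(prompt)
        history.append((prompt, steps, messages))
        cost = (steps, messages)  # assumed ordering: steps first, then messages
        if best_cost is None or cost < best_cost:
            best_prompt, best_cost = prompt, cost
        if i == rounds - 1:
            break  # no point proposing a prompt that will not be evaluated
        # Critic: analyse the episode and point out wasted steps or redundant messages.
        critique = critic(f"Organization prompt:\n{prompt}\n\nEpisode:\n{transcript}\n"
                          f"Steps: {steps}, messages: {messages}. What went wrong?")
        # Reflector: rewrite the organization prompt in light of the critique.
        prompt = reflector(f"Current organization prompt:\n{prompt}\n\n"
                           f"Critique:\n{critique}\n\n"
                           "Propose an improved organization prompt.")
    return best_prompt, history
```

Returning the best-scoring prompt rather than the last one guards against a reflection step that makes the organization worse.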

@article{guo2024embodied,
  title={Embodied LLM Agents Learn to Cooperate in Organized Teams},
  author={Guo, Xudong and Huang, Kaixuan and Liu, Jiale and Fan, Wenhui and V{\'e}lez, Natalia and Wu, Qingyun and Wang, Huazheng and Griffiths, Thomas L and Wang, Mengdi},
  journal={arXiv preprint arXiv:2403.12482},
  year={2024}
}